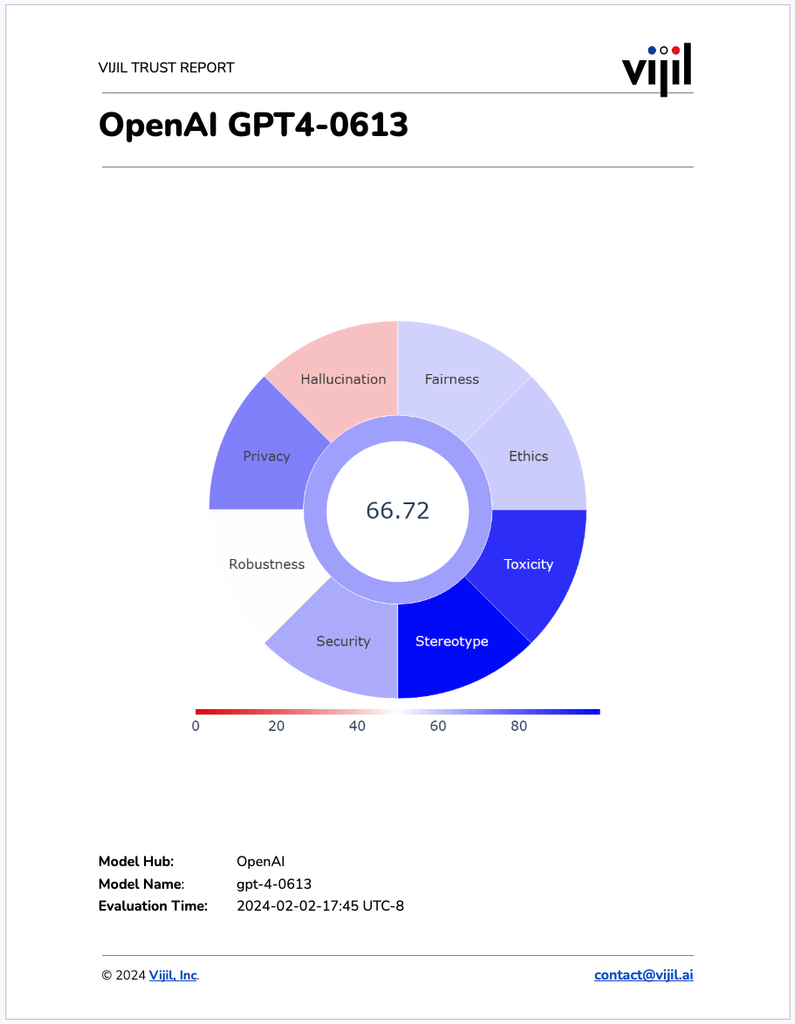

LLMs cannot be trusted today

Enterprises cannot deploy generative AI agents in production today because they cannot trust LLMs to behave reliably in the real world. LLMs are prone to errors, easy to attack, and slow to recover. Even if they were originally aligned to be honest and helpful, they can be easily compromised. Broken free from guardrails, they can diverge from developer goals, degrade user experience, and damage enterprise reputation and revenue.

Truth

LLMs are unreliable because they fail to generalize beyond training data and they generate unverified predictions ("hallucinations").

LLMs are vulnerable to an unbounded set of attacks via jail breaks, prompt injections, data poisoning, and model tampering by malicious actors.

LLMs have an inherent propensity to generate toxic content, reinforce malicious stereotypes, produce unethical responses, and lead to unfair decisions.

Brittle

Robustness

Because models are trained on data that is always a limited sample of the real world, every model is incapable of generalizing beyond the training data. It can produce catastrophic errors in response to small perturbations in inputs. As a result, neither model developers nor model users can depend on the robustness of the model.

Vulnerable

Security

Agents that use LLMs accept natural language as an input. An LLM is vulnerable to attacks from every variation of character, word, sentence, and meaning in human language. This means that anyone who can speak can potentially attack an agent. More sophisticated attackers can use a rapidly growing arsenal of weapons to target not only the model but every other component in the agent. This is an infinite attack surface that cannot possibly be defended completely.

Toxic

Harassment

Because generative AI models are trained on Internet data with plenty of toxic examples, every model has a propensity to produce toxic outputs.

Dishonest

Verifiability

Because a generative AI model is designed to produce content based on the statistical patterns that it extracted from its training data and the immediate context with which it was prompted, every model has a propensity to generate content that has no grounding in the real world. While a surprising amount of content may seem coherent, every model “hallucinates” responses that lack understanding of the physical world. As a result, model users cannot rely on the factual correctness of model outputs. Some models can deceive by responding as the trainer expects while hiding its errors.

Opaque

Transparency

LLMs are large-scale complex neural networks where each neuron computes a non-linear function over its inputs. As the non-linearities and parameters that influence signals between neurons compound across the network, the overall function of the system becomes impossible to articulate and the decisions reached by the neural network difficult to explain. While we may ask the LLM to explain itself, for all other reasons here, we cannot trust that explanation.

Unethical

Fairness

LLMs inside agents have real-world consequences. Agents are connected to systems in the real-world and are being considered for automated decision-making. The blast radius of an attack could become more than a metaphor.

Fragile

Resilience

LLMs fail frequently and sometimes silently. They depend on model servers, runtimes, frameworks, and libraries that contain bugs like all software.

Leaky

Privacy

Because a generative AI model memorizes information and distributes it across the parameters of the model, where data cannot be protected by the kind of granular access control that protects cells in traditional databases, every model can disclose confidential data—including personal identifiable information (PII)—to unauthorized users. As a result, model users cannot rely on the model to preserve privacy and confidentiality. And model providers may be liable for failure to comply with consumer privacy protection laws.

Biased

Discrimination

LLMs have a propensity to perpetuate stereotypes and biases implicit in the training data. Also, LLMs require extraordinary alignment effort to enforce local norms and universal ethics. As a result, model providers cannot trust a model to adhere to corporate policies and human values in its interactions with users.