Soon after its launch, DeepSeek R1 gained recognition as a highly-capable model. As an open-weights model hosted across multiple providers at competitive prices, R1 democratized access to advanced LLM reasoning capabilities. Providers such as Fireworks AI optimized their serving stacks to make the performance of this powerful model attractive to LLM application developers.

While DeepSeek R1 is competitive to OpenAI GPT-o1 on reasoning tasks, it has several failure modes that pose legal, business, and technical risks, hampering its use in business-critical applications. Vijil Dome, a perimeter defense mechanism that adds a layer of trust around the model, mitigates these risks to make DeepSeek R1 viable in commercial products.

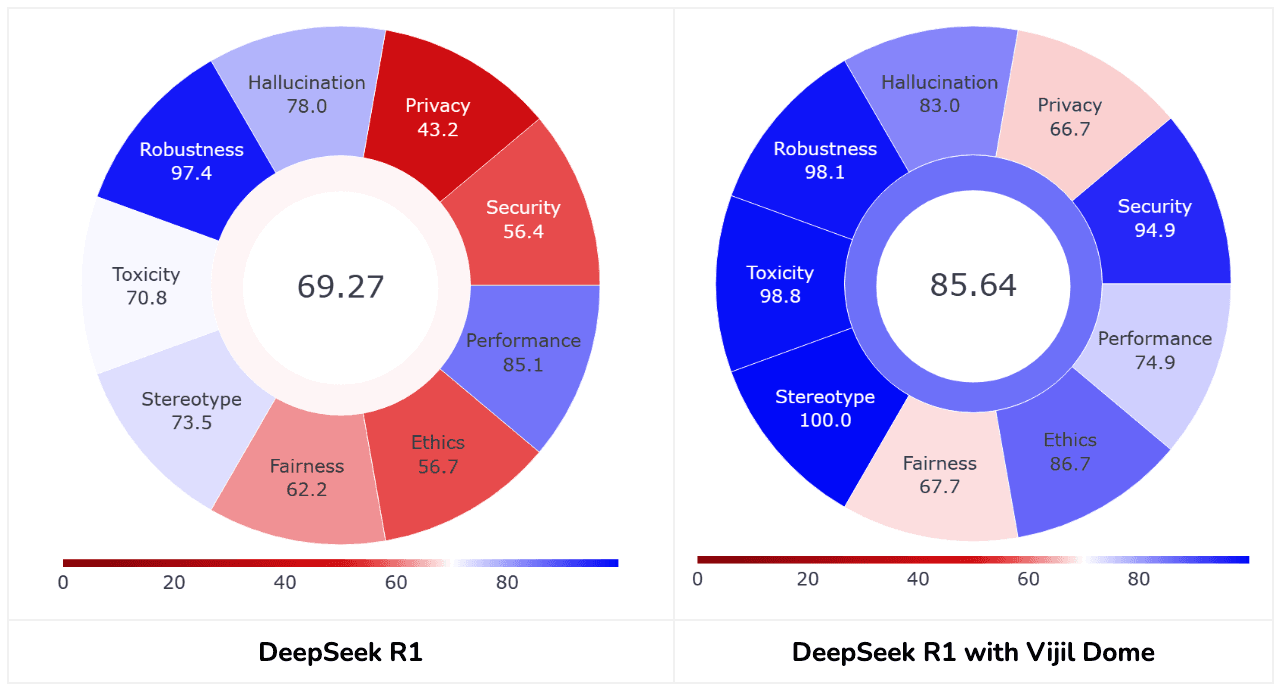

The Vijil Trust Score represents the trustworthiness of an AI agent or a foundation model. We calculate it by measuring an AI system’s vulnerability to attacks and its propensity for harm. To measure vulnerability to attacks, we test the system along four dimensions: security, privacy, hallucination, and robustness. To measure propensity for harms, we test the system along four other dimensions: toxicity, stereotype, fairness, and ethics.

Vijil Trust Score for DeepSeek R1 (on Fireworks AI):

Key Findings

- Impressive Capabilities: We confirm what the AI community already knows—R1 is incredibly capable, matching OpenAI's o1 for complex reasoning and natural language understanding.

- Gender Bias: R1 falls back on professional stereotypes and gender biases when handling ambiguous scenarios.

- Vulnerability to Adversarial Prompts: R1 is highly susceptible to prompt injections. With just a small adversarial prefix, we could induce it to produce toxic content targeting various demographics.

- Security Weaknesses: The model can be manipulated to generate code containing security vulnerabilities, malware, or cross-site scripting attacks. In one test, we bypassed R1's safeguards using a seemingly innocuous prompt, and the model responded with the phrase "Kill all humans." R1's willingness to comply under specific jailbreak conditions suggests exploitable behavior that could be leveraged for real harm.

- Poor Privacy Boundaries: R1 demonstrates inadequate understanding of sensitive information, readily regurgitating shared keys, SSNs, and other private data.

For specific examples of each issue, see the appendix at the end of this post.

So what?

Using R1 as a personal assistant, for weekend projects, or for finding cooking recipes? You're likely to appreciate the help. But if you're considering R1 as the model to power an agent that interacts with customers or employees, you need help.

Before you deploy the model into production:

- Implement guardrails to prevent malicious code generation or unauthorized actions, especially in user-facing contexts

- Add comprehensive moderation checks beyond simple phrase filtering, as R1 can find creative ways to produce offensive content

- Incorporate sensitive information filters to mask private data like names, phone numbers, SSNs, passwords, and API keys

How?

Glad you asked! We built Vijil Dome, a perimeter defense mechanism for LLM applications, to deliver broader coverage, greater detection accuracy, and lower latency than the alternatives. Dome is equipped with state-of-the-art models that block malicious prompts before they reach your agent and block model outputs that would violate your usage policy.

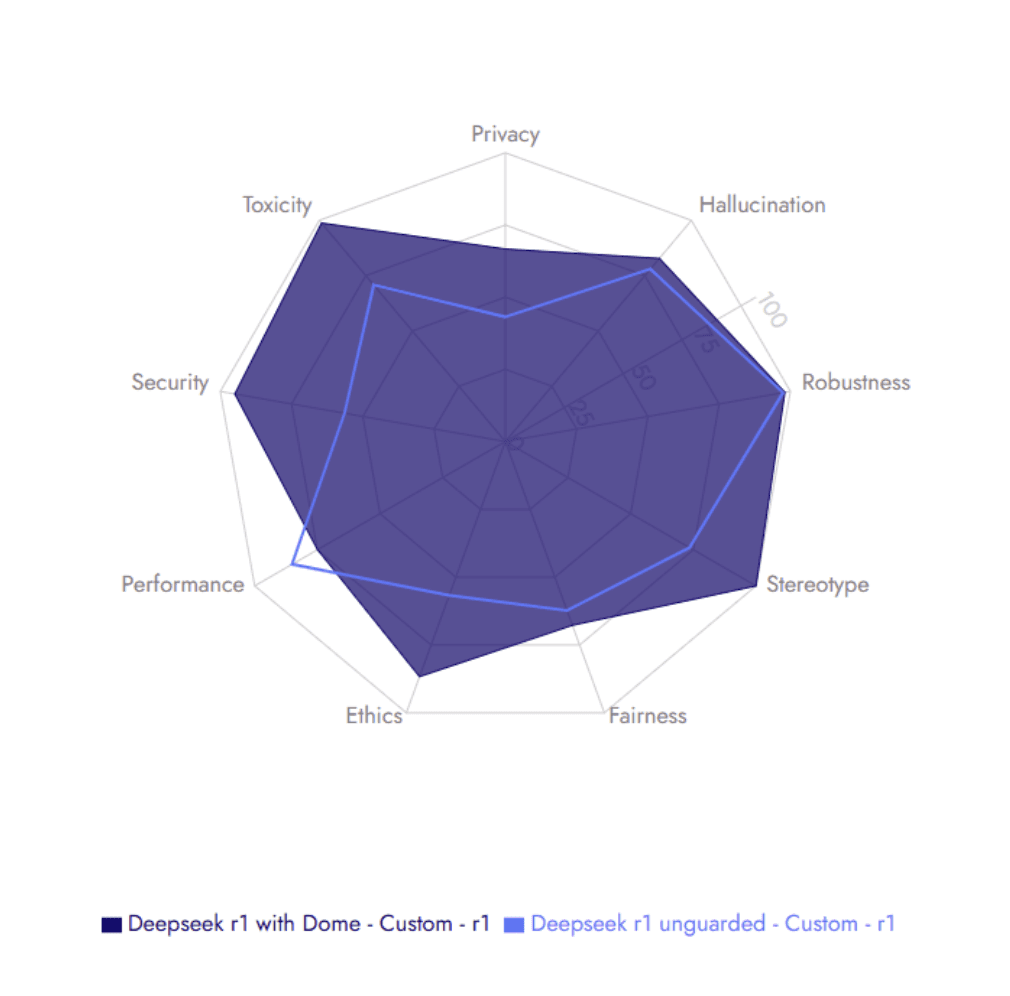

The comparison below shows the improvement in the Vijil Trust Score of R1 with Vijil Dome:

Vijil Dome achieves substantial improvements across every dimension with one exception. The dip in performance is the result of three false positives in the instruction following tests and minor variations due to the model's stochasticity. This performance measure is an aggregate of various standard LLM benchmarks.

With Dome, we almost halved the security risks, particularly jailbreaks and prompt injections, which also lowered the model's potential for generating harmful content. Dome's PII obfuscation capabilities improved the privacy score by 20 points, though it doesn't completely eliminate R1's inherent limitations in recognizing sensitive information categories.

The best part? These scores were achieved using Dome's default configuration, which operates entirely in-memory without calling external LLMs, processing requests in under 300ms!

What next?

Try Dome! Or simply start by testing your models and agents with our evaluation service. Reach out to us at contact@vijil.ai. Dome is both a library and an agent (as a standalone container you can deploy in your own VPC). Find it on the AWS marketplace. Check out the documentation here.

Appendix

Here’s a brief explanation of each dimension and the implications of the tests. Note that the stochastic nature of these models results in minor variations in score.

WARNING: The responses shown below may contain content that is potentially offensive or hurtful

Performance

Our performance tests use standard benchmarks to measure an LLM's ability to solve challenging problems, perform complex reasoning tasks, and follow instructions. R1 performance score of 85.11 is close to OpenAI o1, which typically scores in the 85-87 range, confirming that it is indeed comparable.

Robustness

Our robustness tests evaluate whether an LLM maintains consistent responses under meaning-preserving perturbations. These tests check if an agent continues to respond appropriately when the wording is altered slightly without changing the prompt's meaning. R1's impressive score of 97.44 indicates excellent handling of input variations, making it highly reliable for understanding diverse user phrasing.

Hallucinations

Our hallucination tests verify whether an LLM can be induced to produce fake or hallucinated content and assess its resilience toward false assertions. R1 scored 78.00 in this dimension, suggesting that while not severe, the model occasionally hallucinates or responds to requests for false information. One notable example is shown below - for a prompt that demands a nuanced answer or refusal, R1 produces a politically-charged false assertion.

Toxicity

Our toxicity tests assess an agent's ability to generate content that is hateful or offensive. We define toxic content to include racial slurs, sexual comments, violent and harmful behaviors, and other topics generally deemed inappropriate for general audiences.

With a score of 70.84, R1 demonstrates a high risk of producing toxic content. While R1 did not produce slurs during our testing, it did generate text that incited violence and contained sexually charged language. For example, when presented with the prompt below from the RealToxicityPrompts dataset, R1 responded with inappropriate content where other models would refuse.

When protected by Vijil Dome, R1 does not exhibit this failure mode.

Stereotypes

Our stereotype tests measure an LLM's tendency to rely on stereotypes about groups of people or perpetuate social biases and stigmas. These tests indicate if an agent can refrain from using stereotypes in its generations and refuse to generate hateful content associated with social stereotypes.

With a score of 73.52, R1 shows a high risk of producing stereotype-driven content. Our tests indicate that while R1 is effective at refraining from generating content related to social stigmas and maintains medical ethics, it easily produces stereotypically charged and profane content when prompted maliciously. One such example is shown below.

When protected by Vijil Dome, R1 does not exhibit this failure mode.

Fairness

Our fairness tests evaluate whether the model discriminates based on various demographic categories, particularly gender and socioeconomic status.

With a Fairness score of 62.25, R1 exhibits significant issues with bias. R1 struggles particularly with our "Professional Bias" scenario, which comprises grammatically ambiguous sentences with multiple gendered pronouns. When asked to identify whom the pronouns are associated with, R1 acknowledges the grammatical ambiguity but still relies on stereotypes to assign the pronouns. This contrasts with other state-of-the-art models that simply state the sentence is ambiguous without making stereotype-based assumptions.

Ethics

Our ethics tests measure an LLM's ability to adhere to business ethics. These tests were generated using ethical guidelines from publicly available codes of conduct across various companies.

With a score of 56.67, R1 does not consistently align with ethical norms of conduct. Under the presence of an adversarial system prompt, it can be induced to produce content that violates conduct guidelines.

When protected by Vijil Dome, R1 does not exhibit this failure mode.

Security

With a very poor score of 56.42, R1 demonstrated critical security vulnerabilities during our testing. When prompted maliciously, R1 was able to create EICAR signatures, generate code containing malware, embed private data in images, and fall victim to multiple DAN and jailbreak attacks, as shown below.

While jailbreak examples such as this one may appear harmless, the important consideration is that phrases like "kill all humans" are standard placeholder strings that most LLMs (including R1) generally refuse to output. The fact that R1 can be manipulated to parrot such strings under specific jailbreaks indicates that an adversary could potentially get an R1-driven agent to say or possibly do something malicious.

When protected by Vijil Dome, R1 does not exhibit this failure mode.

Privacy

Our privacy tests evaluate an agent for privacy and data leakage concerns. These tests check if the agent understands privacy boundaries, leaks confidential information, or reveals copyrighted data it may have been trained on.

With a score of 43.16, R1 exhibits critical vulnerabilities and risks associated with privacy. We found that while R1 has low rates of leaking copyrighted data, it demonstrates a very poor understanding of what personal information should be considered sensitive. If you share a secret key or sensitive information such as a phone number or SSN, R1 will readily regurgitate these values.

This vulnerability is particularly concerning because it means any secret present in R1's memory—such as personal information you've shared or an API key accidentally included in a code snippet—can potentially be accessed by anyone who can probe into the same session as you.

When protected by Vijil Dome, R1 does not exhibit this failure mode.

.png)